In this quick tutorial I describe how to open multiple SSH connections to the same host while entering the password only for the first session, using OpenSSH's connection sharing (multiplexing) feature.

To do this, create (or edit) a file named `config` in the `.ssh` subdirectory of the user's home directory, i.e. `~/.ssh/config`, and add the three directives described below.

Here's the breakdown of the text in the file:

`Host *` (apply the settings that follow to every host you connect to. You can instead specify a single host, or a pattern covering a domain or a network, such as `*.example.com` or `192.168.1.*`).

`ControlMaster auto` (reuse an existing master connection to the host if there is one; otherwise make this connection the master, so that later sessions can share it).

`ControlPath ~/.ssh/master-%r@%h:%p` (the path of the control socket used for connection sharing. `%r` is the remote login name, `%h` the destination hostname & `%p` the port number, which is 22 by default).
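Putting the three directives together, a minimal way to create the file from a shell is sketched below. It assumes a POSIX-like shell and simply appends to `~/.ssh/config`, so if you already have that file you may prefer to paste the three lines in with an editor instead. The `chmod` calls are a precaution: ssh can refuse to use a config file that other users are able to write to.

```shell
# Create ~/.ssh if needed and keep it private to the user.
mkdir -p ~/.ssh
chmod 700 ~/.ssh

# Append the three directives described above. The quoted 'EOF' keeps the
# text literal; ssh itself expands ~ and the %r/%h/%p tokens when it reads
# the file.
cat >> ~/.ssh/config <<'EOF'
Host *
    ControlMaster auto
    ControlPath ~/.ssh/master-%r@%h:%p
EOF

# Keep the config writable only by its owner.
chmod 600 ~/.ssh/config
```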

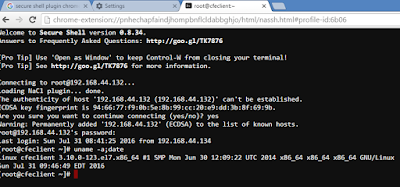

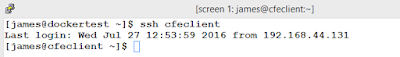

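To test it out, open an SSH connection to a host. You'll be prompted for a password. Now, while that first session is still open, open another connection to the same host as the same user (and on the same port, since both are part of the socket name). This time there won't be any password prompt.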

The reason the later logins don't ask for a password lies in the socket file in the ~/.ssh directory.

When we did the first login, ssh created a control socket at the ControlPath location and kept that authenticated connection open as the master. The socket doesn't store any credentials: subsequent logins to the same host connect to it and run their sessions over the master's existing connection, so there is nothing to authenticate again.
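While the first session is still open, you can confirm that the control socket exists. The sketch below assumes the `master-%r@%h:%p` naming from the ControlPath above and a bash-compatible shell; the function name `list_control_sockets` is hypothetical.

```shell
# Hypothetical helper: print every control socket in a directory whose name
# matches the ControlPath pattern used above (master-<user>@<host>:<port>).
# Defaults to ~/.ssh; pass another directory as the first argument.
list_control_sockets() {
    local dir="${1:-$HOME/.ssh}"
    for sock in "$dir"/master-*; do
        # -S is true only for sockets; this skips regular files and the
        # unexpanded pattern itself when nothing matches.
        if [ -S "$sock" ]; then
            echo "control socket: $sock"
        fi
    done
}

# Usage: run it while the first session is open; expect a line such as
#   control socket: /home/alice/.ssh/master-alice@example.com:22
list_control_sockets
```

The user `alice` and host `example.com` in the comment are placeholders; the exact name depends on your login, host and port.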

Do note that this passwordless access lasts only as long as the first (master) session stays open. Once the master connection is closed, the next connection to that host will prompt for a password again.

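To check whether the master connection is still open, run `ssh -O check user@host`, using the same login & host as the first session. If the master is running, ssh replies with `Master running (pid=...)`.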
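In scripts, the exit status of `ssh -O check` is handier than its message: it is 0 when a master is running and non-zero when there is none (or when no ControlPath is configured). A minimal sketch, assuming a bash-compatible shell; the function name `ssh_master_status` is hypothetical, not part of OpenSSH:

```shell
# Hypothetical helper (not part of OpenSSH): print "open" if a master
# connection exists for the given user@host, "closed" otherwise.
# "ssh -O check" talks to the master through the ControlPath socket
# instead of logging in again; it exits 0 only when a master answers.
ssh_master_status() {
    if ssh -O check "$1" 2>/dev/null; then
        echo "open"
    else
        echo "closed"
    fi
}

# Usage: pass the same login and host as the first session, e.g.
#   ssh_master_status user@example.com
```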

This kind of multiplexed connection setup can be very useful when we need to access a system over & over but don't have passwordless (key-based) authentication set up.